Some thoughts on minimal computing, or my slowly evolving 2021 side project

28 Dec 2021

Tags: os, assembly, x86, macos, virtualization

Back when we were in lockdown earlier this year, I started doing a bit of exploring of computer history. As a lapsed Mac user I went back and read some programming manuals from the original heyday of the classic Mac, looking at System 6 through to System 9, seeing how it evolved (and failed to evolve) as the computing world moved forward. This covers a time when we went from single-tasking to multi-tasking, from 68K to PowerPC, and from the lone computer to the Internet. It was a fun time to revisit, and a nice reminder of where we came from and of how much of modern computing I take for granted.

I remember whilst reading one of the first books, learning about how memory was managed in the early Mac versions, and I started feeling instinctively uncomfortable because here was the operating system messing around with the application's memory but there was no locking mechanism to prevent both parties trying to access the same structures at the same time, a real issue in modern programming. I've got so used to dealing with concurrent and event driven programming that I forgot there was a time when computers just did one thing at a time! The reason they didn't need any locking mechanisms whilst the operating system reworked the memory map of the application is that only one thing could run at a time - CPUs had just one core, and there wasn't any pre-emptive task switching. Either the application was in control, or the operating system was, and handover only happened when the currently active party was ready to do so. Crazy to think now, but that all felt very normal for personal computers back in the day.
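To make that handover idea concrete, here is a minimal sketch in Python, which is obviously nothing like what ran on a classic Mac. The `app` and `run` names are made up for illustration, and generators stand in for applications that only give up control when they choose to. Because nothing else can run between two `yield` points, the shared list needs no lock.

```python
from collections import deque

def app(name, steps, heap):
    """A hypothetical 'application' that mutates shared state, then hands over."""
    for i in range(steps):
        # No lock needed: nothing else can run until we reach the yield below.
        heap.append(f"{name}:{i}")
        yield  # explicit handover, the only point where control can change hands

def run(tasks):
    """Round-robin over the tasks until every one of them has finished."""
    queue = deque(tasks)
    while queue:
        task = queue.popleft()
        try:
            next(task)          # run the task up to its next yield
            queue.append(task)  # it is still alive, so put it back in line
        except StopIteration:
            pass                # the task finished; drop it

shared_heap = []
run([app("editor", 2, shared_heap), app("system", 2, shared_heap)])
print(shared_heap)
```

The flip side, as on those old systems, is that a task which never yields would hang everything else.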

In fact, looking back at systems from those days, the term "operating system" feels almost overblown, because once an application was running the operating system was really more of a set of common libraries that drew a few things like windows and menus for you or let you access disks. The application was really just running all this common code held in ROM; it wasn't switching over to an active running kernel as we'd assume today. It's almost like unikernel programming back before we needed a name for it as something special and unusual.

Going back through these old manuals was an interesting reminder of where we've come from in terms of personal computer development. And studying what was to be a dead-end of an operating system was probably more educational than had I, say, chosen to revisit Windows of the same era. System 9, the last version of the classic Mac operating system before they effectively just swapped it all for NeXTSTEP, was a troubled and disturbed thing, at least based on reading the programming manuals I found from Apple of the time: yes, there were threads to let you do what we'd consider proper concurrency today, but none of this applied to the main thread of your application, which was still cooperatively multitasked like we were back in the 80s. You can feel them trying to turn this big ship around whilst it slowly capsizes under its own weight thanks to having to support legacy applications.

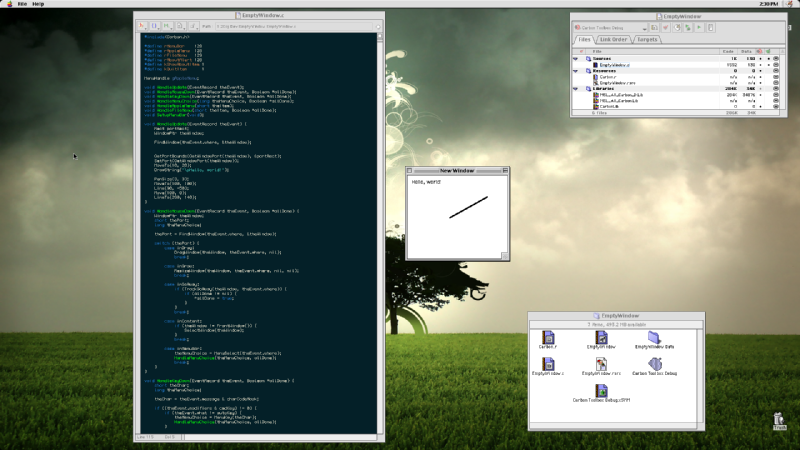

At the same time as being a fascinating bit of historical study, despite my frustration with a lot of where computing has ended up, it was also a useful reminder that progress isn't necessarily a bad thing: I did write some simple hello world code using Metrowerks CodeWarrior on an emulated Mac (I do have some actual old Macs, but they're stored away), but as with all computer nostalgia I very quickly got bored because I can't do much with it that I'd use a computer for today, mostly due to the lack of networking and modern cryptography libraries.

But still, if you're inclined to see where we've come from, then I can highly recommend spending an afternoon or two reading some programming manuals from the 80s and 90s for your favourite platform of the era.

By my own description, I'm not interested in retro computing - I do very quickly get past the nostalgia and feel the impracticality for anything I'd want to use a computer for today - so then why did I spend all this time researching how to program these elderly platforms?

I was in part inspired by looking at all the fun people are having with "fantasy" old computers, such as the Pico-8 virtual console platform, or Uxn which emulates a simple stack-based processor (if you want to learn more about Uxn then there is a nice little tutorial here which gets you up to speed quite quickly). Both these platforms are based on the emulation of a computer platform that never existed (an endeavour that got me my PhD now I think about it...), and so I think they appeal to me because they mostly avoid the pitfalls of being just a place for wallowing in computer nostalgia, and exhibit the fun that there is in building software for a more limited domain. I follow a bunch of people building software for Uxn on social media, and there's just a sense of fun and enthusiasm there for building software that I think is interesting and contagious, particularly as a way to try and make low-level computers more accessible, as they were back in those early days of the personal computer era.

Modern computers can do a lot of really cool stuff, but there's a lot to it, which can easily get in the way of the learning/reward feedback loop that I think is essential for helping people pick up understanding computers as a pastime. I used to think that, despite my personal distaste for the language, JavaScript programming was for a while the new easiest path to fun programming, but try starting a JavaScript project without first having to understand how to install the tool (nvm) that'll let you install the tool (npm) that'll let you install the library you want to use, and I think those days might have gone.

Similarly, micro:bit or Arduino programming is perhaps the closest thing to having a minimal computer to play with, but the jump from a micro:bit, where there's no screen or "conventional" input device, to a Raspberry Pi, where you're back on top of Linux, feels like quite a lot of computing has been skipped over, and specifically the bit I find interesting to make more explorable.

I'm also interested in what I've come to refer to as a notion of "minimal computing". In the rush to add more features to our systems we've added so much complexity that it is impossible for the average user to understand what is going on, and unfortunately the software we use is often written by corporations that will happily leverage that fact to help them make money from your actions.

If you'd like an eye opening experience on this and own a Mac, then get yourself a copy of Little Snitch (they have a free trial) - you quickly discover that, to pick an example at random, Autodesk Fusion 360, a CAD tool, will contact Facebook, Google, and a dozen or so other analytics and marketing companies every time it launches, and I'd have to train Little Snitch to block them every time there was an update. To be clear Autodesk are not exceptional in doing this, it's just one example that sticks in my head as their frequent updates made me have to retrain Little Snitch each time because they didn’t sign their binaries properly, but it should be exceptional that all this is happening behind the back of the regular user on their own computer.

The operating systems that we use daily are based on design principles from decades ago, and they try to protect you from external aggressors, but have no support to prevent software that you've accepted to run from doing things you might not want it to do.

Simpler systems don't inherently stop this, but I like the idea of taking a step back from the systems we have ended up with and questioning what does and doesn't need to be there, or what could we change to address these things I find abhorrent.

Over time we've ended up with a small number of dominant operating systems and these continue to evolve along a particular path that is a mix of what is practical, what the market wants, and limited time and budget constraints. But along the way to here there have been lots of other potential branches we might have taken: some have been tried and failed, some have been tried but were dropped for other pragmatic reasons around engineering effort and wanting to try something else, and others yet failed to get momentum.

All of which is a roundabout way of trying to say that what we have today isn't necessarily the best, it's just what the current tech/capitalism system can afford for us, and so I find it interesting to go back and look at some of the alternative views of the future from back then and see if they provide us with an alternative to where we find ourselves today, where I feel our computers are becoming less our own.

At the same time I don't want to go back to how things used to be - I'm not about to become the Townsends of tech - in general computing is more useful to more people today than it ever has been, which is something to celebrate. But that doesn't mean we have the best solution for users, and in looking to improve I find it easier to re-evaluate the past than imagine something totally new.

A final thread on this, before I try to draw this into some vague conclusion, is to acknowledge there is a bunch of necessary complexity in modern computing, and the one sour note to me about some of the simpler computer initiative people I follow online is watching them push simplicity at the expense of some of the complexities that make computers more accessible and part of the messy reality of everyday life.

The most obvious example of that which I've hit up against is secure transport at the network layer. This is something that is both hard to implement (needs cryptography libraries) and awkward to maintain (key and certificate management is not a task anyone really likes), and so I see quite a few voices saying we should get rid of it from endeavours like Gemini. Gemini is a sort of minimal alternative to the web that does away with CSS and JavaScript, going back to when the web was more a collection of documents than apps. I can certainly see the appeal of that for a lot of what I use the web for (e.g., this page). But Gemini (sensibly in my opinion) still requires secure connections, which makes it harder to build clients and adds overhead to hosting servers, and so people rebel against this saying it's not made things simple enough.

For example, I got into a debate on this recently where someone I follow took the position that "there's nothing sensitive on my blog so no one needs TLS for this", which I think makes assumptions about the reader that you can't make. What you think is normal might not extend to other cultures: for, say, someone trying to learn from your blog in a place where education is restricted, your content suddenly becomes much more problematic than it was to you. My concern is that without TLS we'll end up restricting the simple web to those who have an easy life, and cut out the marginalised - which is exactly who we should be targeting with a web without tracking and with minimal resource requirements!

Perhaps I need to refine "minimal computing" to "minimal viable computing". I don't think needing TLS is in any way at odds with simple computing, but I do think it needs the lines redefined. No one thinks twice about using TCP for network traffic, despite that being quite a complex thing to implement, but that's because it's nicely hidden away behind the sockets layer for most programmers. If we could move the normal programming stack so that TLS was less obvious to the programmer would people still object?
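As a rough illustration of what "less obvious" could look like, here is a small Python sketch using the standard `socket` and `ssl` modules. The `open_stream` helper and `DEFAULT_CONTEXT` name are my own invention for this example, not part of any library: the caller asks for a connection to a host and port and gets back something that reads and writes like an ordinary socket, with certificate and hostname checks already switched on.

```python
import socket
import ssl

# The default client context verifies certificates and checks host names.
DEFAULT_CONTEXT = ssl.create_default_context()

def open_stream(host, port, context=DEFAULT_CONTEXT, timeout=10):
    """Hypothetical helper: return a connected, TLS-wrapped socket.

    The caller never touches handshake or certificate details; it just
    uses the result like a plain TCP socket (sendall, recv, close).
    """
    raw = socket.create_connection((host, port), timeout=timeout)
    try:
        return context.wrap_socket(raw, server_hostname=host)
    except Exception:
        raw.close()  # don't leak the TCP connection if the handshake fails
        raise
```

This only hides the client side; it does nothing for the key and certificate management on the server that people quite reasonably grumble about.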

If at this point, you were looking for a grand unifying theory to tie all this together, I don't have one, at least not yet. I have lots of ideas rattling around my head that don't really form a coherent answer. I've been left with this large incoherent map of thoughts that I feel are coalescing around some notion of "minimal computing", aided in part by a long conversation with a friend as we smashed rocks on a hillside for an afternoon in the summer.

Both of us were trying to consider why computing hadn’t turned out the way we had hoped when we were younger, but coming at it from quite different angles: Joe was questioning how we structured larger networked systems, which contrasted nicely with my lower level interests.

Whilst we didn’t reach any earth shattering conclusions, it was nice to be challenged on some of my thinking, which inspired me to want to start to get my hands dirty again to provide a platform for answering some of these questions that were floating around my head, and to give me something to perhaps crystallise some of these ideas around.

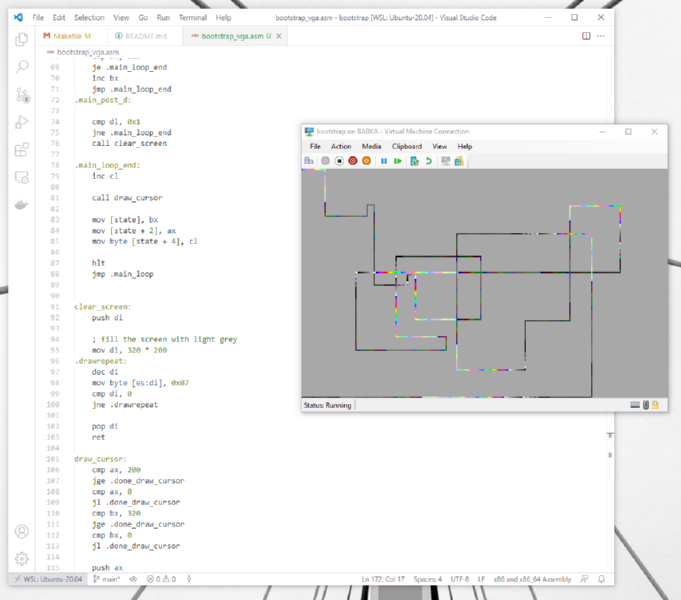

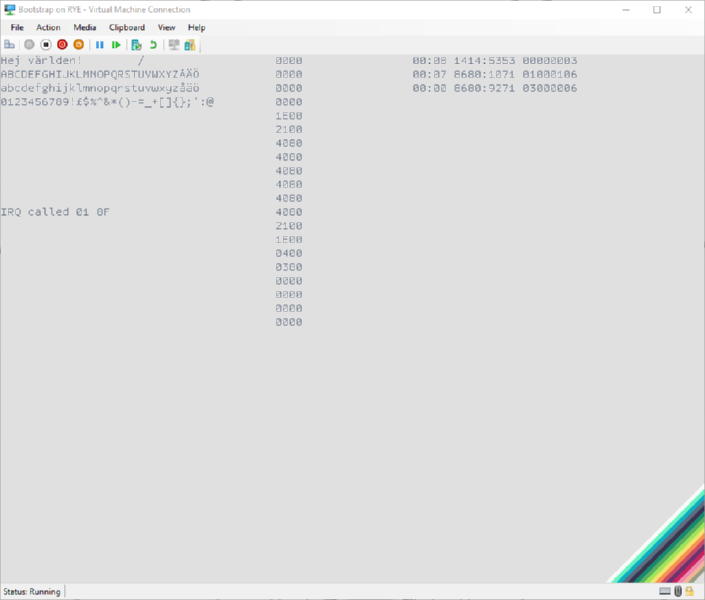

To that end I've slowly spent the last few months finding time to play with the fantasy computer that's built into every PC: the 8086 and its descendants. If you have a PC that uses a traditional BIOS, then when you power on the computer the system likes to start with the pretence that the last few decades never happened (as if we could all be so lucky at times). Your computer will start as if it's an 8086 just like in the late 70s: 16 bit registers, and 1MB of RAM max. It'll copy the first 512 bytes from the start of the boot disk into memory, and it'll just start executing that. And that's a fun place to start if you want to try your hand at low level systems: you can get a lot of mileage out of 512 bytes and a few 16 bit registers, particularly because VGA graphics are mapped into memory, so you can just program pixels directly. I very quickly made a snake game like thing for instance, just using bootloader code.

Once you've pushed the limits of playing within those initial confines, you can convince the processor that some progress is allowed, and climb up into 32 bit mode, giving you access to more CPU features and memory, you can work out how to load things from the disk beyond those initial 512 bytes, and so forth.
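To give a feel for how small that starting point is, here is a sketch in Python that lays out a 512 byte boot sector by hand. It is not my actual bootloader, and `make_boot_sector` and `HALT_LOOP` are names invented for this example. The two things it relies on are that a BIOS boot sector ends with the bytes 0x55 0xAA at offsets 510 and 511, and that the four bytes of machine code shown (`cli`, `hlt`, then a short jump back to the `hlt`) simply park the CPU.

```python
SECTOR_SIZE = 512
BOOT_SIGNATURE = b"\x55\xaa"  # must sit in the last two bytes of the sector

def make_boot_sector(code: bytes) -> bytes:
    """Pad raw machine code with zeros and append the boot signature."""
    max_code = SECTOR_SIZE - len(BOOT_SIGNATURE)
    if len(code) > max_code:
        raise ValueError(f"code is {len(code)} bytes, limit is {max_code}")
    return code + bytes(max_code - len(code)) + BOOT_SIGNATURE

# cli (FA); hlt (F4); jmp short back to the hlt (EB FD)
HALT_LOOP = bytes([0xFA, 0xF4, 0xEB, 0xFD])

image = make_boot_sector(HALT_LOOP)
print(len(image), image[-2:].hex())
```

Writing `image` out to a file gives you a raw single-sector disk image. Whether a given virtual machine will take a raw image directly or wants it converted to its own disk format is something to check for the tool you use. In practice you'd let NASM generate the code bytes rather than typing opcodes by hand.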

A couple of things make this sort of exploration more fun to me personally than using Pico-8 or Uxn. Firstly, it’s slightly more “real”, in that the code I generate this way could boot on a real PC without any older operating system (at least, any PC that uses BIOS rather than the more modern UEFI system). It’d be fun one day to get an older Thinkpad and get it booting my code. Secondly, before I get to the stage of using a real computer, it's actually very easy to code raw x86 like this thanks to modern virtualisation systems: here my code is running using Hyper-V, the virtual machine software built into Windows, but you could just as easily do so using VirtualBox, or a PC emulator like Bochs. Armed with one of these, a copy of the free Netwide Assembler (NASM), and your favourite text editor you can get started messing about on your own retro computer.

I do wonder if with a little effort one could make a nice simple front end to present Hyper-V more like Pico-8 or Uxn, hiding away the somewhat intimidating management console Microsoft provides, and automating the creation of the disk images to feed it, which currently needs some command line knowledge. I guess that it'd be a pretty niche thing, given that Hyper-V is only available in Windows Professional editions, and I have enough side projects as it is.

Embarrassingly, despite having written assembly language code for both ARM and PowerPC in the past, I'd never done so for x86, the processor that powers most personal computers and has done for a long time. I was always put off learning x86 vs simpler processors like the ARM or PowerPC because x86 seemed to carry a lot of legacy baggage with it, but ironically that's exactly why it's become a fun playpen for me to start working out some of these ideas, being able to slowly target the different parts of its history that all still live on in there.

As I close the year, my little exploration of x86 is ongoing still: it now pulls the CPU up into 32 bit mode and has a minimal "file system" to let me get fonts loaded so it can do some text rendering. Behind the scenes I’ve been learning about the memory models and task models of x86 as I move towards getting it to do something more than just “boot”.

If you'd like to do a similar exploration then I can heartily recommend this book by Lyla B. Das which walks you slowly from the 8088 and 8086 days, and up through to the Pentium. It stops before the platform makes the leap to 64 bit, but there's plenty to get your head around in just the 8086 and 80386 days, and I found this book did a good job of walking you through it. In particular it complements the kind of direct detail found in wiki.osdev.org, which is another wonderful resource, by giving you a broader picture whilst still touching the detail.

So far my x86 efforts have been mostly a bit of light fun to let me reconnect with low-level code writing for the first time in a while. I’ll keep plugging away at this in 2022, but at some point I will try to focus it back on the broader issues I highlighted earlier. Which of those I tackle first will probably be a function of what walls I hit first and where I find x86 lends itself to playing. What I want to avoid doing is getting sucked into just writing yet another generic kernel - as fun and valuable as that may be.

One direction I might try to take it down is having a hybrid system where applications are developed like those old 90s Mac applications I referred to earlier, but kept simple by pushing the shared hard computing into say other VMs that are shared resources, almost like other devices. I suspect I can have fun in that vein just leaving it in Hyper-V for now and letting Windows do the heavy lifting for networking, Wifi, cryptography, etc. allowing the VMs freedom to be fun playgrounds.

I'm also having fun using segmented memory, the old and now abandoned memory architecture that Intel tried to run with that got dropped in favour of building modern computers like they were minicomputers from the 70s with paging. Computers have so many resources these days: what other avenues of structuring my operating system does that open up if I run a lot of minimal environments on a maximum machine like any computer I've recently bought? And whilst x86 has now dropped segmentation, thanks to projects like RISC-V, an open source CPU project, if I wanted to go more down this avenue I could just create a custom RISC-V processor with segmentation.
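For anyone who hasn't met segmentation before, here is a sketch of the simplest flavour of it, the 8086 real mode kind, again in Python for convenience. Protected mode segments go through descriptor tables and aren't shown here. The function names are made up for this example; the arithmetic (segment shifted left four bits plus offset, wrapping at 1MB on an original 8086) and the VGA mode 13h framebuffer living at segment 0xA000 with 320 pixels per row are standard.

```python
def linear_address(segment: int, offset: int) -> int:
    """8086 real mode: (segment << 4) + offset, wrapped to 20 bits."""
    if not (0 <= segment <= 0xFFFF and 0 <= offset <= 0xFFFF):
        raise ValueError("segment and offset are 16 bit values")
    return ((segment << 4) + offset) & 0xFFFFF  # 20 address lines, hence 1MB

VGA_SEGMENT = 0xA000   # mode 13h framebuffer segment
MODE_13H_WIDTH = 320   # 320x200, one byte per pixel

def pixel_address(x: int, y: int) -> int:
    """Linear address of pixel (x, y) in VGA mode 13h."""
    return linear_address(VGA_SEGMENT, y * MODE_13H_WIDTH + x)

print(hex(linear_address(0x07C0, 0x0000)))  # where the BIOS puts the boot sector
print(hex(pixel_address(0, 0)))
```

Note that many different segment and offset pairs land on the same byte, for instance 0x07C0:0x0000 and 0x0000:0x7C00, which is part of both the charm and the confusion.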

At the other end of the scale I am keen to play with the UI to try to make the user subtly aware of how the computer is behaving and what's going on in the background. With old spinning disks it was obvious when your computer was getting stressed out as you could hear it - with SSDs that ambient information stream is lost. I want my little playpens to make it obvious if one is using more power than the other, or if one is opening network connections when I don't expect it to.

There’s a lot in there, and I’m sure I’ll just get to scratch the surface on most of it. But I do think it’s an increasingly interesting area to plug away at every time I see that a computer is becoming less of a personal computer and more of a device other companies let you use in ways they approve of.

I remember many years ago seeing Cory Doctorow give a talk on how there was a war on the general purpose computer, and at the time being somewhat dismissive of the premise, but I feel now this is a motivator to explore in this area and why I take joy in watching others do so, be it via similar endeavours to mine, or playing with tools like Uxn. It’s nice to find there is an intersection between making low level computing fun/accessible and trying to convince myself there is hope to regain control of the general purpose computer.